| 10:30 |

Logics and Verification

Simon Docherty

In recent years the key principles behind Separation Logic have been generalized to generate formalisms for a number of verification tasks in program analysis via the formulation of 'non-standard' models utilizing notions of separation distinct from heap disjointness. These models can typically be characterized by a separation theory, a collection of first-order axioms in the signature of the model's underlying ordered monoid. While all separation theories are interpreted by models that instantiate a common mathematical structure, many are undefinable in Separation Logic itself and determine different classes of valid formulae, leading to incompleteness for existing proof systems.

Generalizing systems utilized in the proof theory of bunched logics (the underlying propositional basis of Separation Logic), we propose a framework of tableaux calculi that are generically extendable by rules corresponding to coherent formulae. All separation theories in the literature, as well as axioms for a number of related formalisms appropriate for reasoning about complex systems, security, and concurrency, can be presented as coherent formulae, and so this framework yields proof systems suitable for the zoo of separation logics and a range of other logics with applications across computer science. Parametric soundness and completeness of the framework are proved by a novel representation of tableaux systems as coherent theories, suggesting a strategy for implementation and a tentative first step towards a new logical framework for non-classical logics.

(Joint work with David Pym)
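
As a rough illustration of the shape of axiom the abstract refers to, the block below gives the standard general form of a coherent formula, followed by one property of the kind that appears as a separation theory. The ternary relation $R(x, y, z)$ (read "$x$ composed with $y$ yields $z$") and the choice of cancellativity as the example are assumptions made here for illustration; they are not taken from the abstract.

```latex
% General form of a coherent formula: each A_i is atomic and each B_j is a
% conjunction of atomic formulae.
\forall \bar{x}\, \bigl( A_1 \wedge \dots \wedge A_n \rightarrow
    \exists \bar{y}_1\, B_1 \vee \dots \vee \exists \bar{y}_m\, B_m \bigr)

% Illustrative instance (cancellativity), with n = 2, m = 1 and no
% existential quantifier in the conclusion:
\forall x, y, y', z\, \bigl( R(x, y, z) \wedge R(x, y', z) \rightarrow y = y' \bigr)
```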

Andrew Lewis-Smith

We investigate intermediate logics that retain a weak form of contraction. Whereas intermediate logics are generally constructive and well understood proof-theoretically, the same cannot be said for logics with restricted contraction. This is partly because such systems have a rich semantic motivation, being many-valued or "fuzzy." As a result, the majority of work on such logics focuses on algebraic and semantic aspects, downplaying questions of proof. Indeed, the lack of a sufficiently worked-out proof theory is even worse in the case of so-called intermediate logics with fuzzy semantics. Generalized Basic Logic (GBL) is one such logic, extending the Basic Logic (BL) of Hajek by adding pre-linearity to the axioms. We have succeeded in extending an algebraic semantics of Urquhart to GBL (from Hajek's BL), have proven soundness for BL under this semantics, and are currently working on the completeness result. We have identified a connection with Kripke frames in the work of Bova-Montagna, which could help simplify the existing approaches to fuzzy logic, as it extends Kripke frames to the case of the unit interval. In the fullness of time we intend to characterize algebraically the relation between BM-frames, Kripke frames, and totally ordered commutative monoids.

Gleifer Vaz Alves

Autonomous Systems (AS) are widely applied in critical systems that must be reliable, such as Autonomous Vehicles (AV), aircraft, robots, and so on. Thus, one should be concerned with formal verification techniques in order to properly check whether the AS is working as designed. Model Checking can be applied to an AS, but when the AS has properties and behaviour that are constantly changing, we should take into account the Model Checking Program technique, where one can verify the actual code of the AS.

An AS can be represented by means of an intelligent agent (or multiple agents). We may describe the behaviour of an AS through the agent's beliefs, plans and goals (within the so-called BDI agent architecture).

Now it is necessary to formally verify our intelligent agent. This can be done with the MCAPL (Model Checking Agent Programming Language) framework [1]. With MCAPL one may write agent code in an agent programming language, for instance Gwendolen, and then formally verify it with AJPF (Agent Java Path Finder), where one writes formal specifications in a formal language based on temporal logic.

With this in mind, we aim to apply the MCAPL framework to the formal verification of AV (e.g., cars, underwater vehicles). In previous work [2], we established the SAE (Simulate Automotive Environment), which is used to formally verify a simple intelligent agent controlling the basic behaviour of an autonomous car. In [2], we wrote formal specifications in PSL (Property Specification Language), the formal language of AJPF, in which one can write specifications using temporal logic operators.

However, in order to properly specify the behaviour of an AS, we could extend the PSL language with deontic logic operators. By using deontic operators it would be possible to formally specify scenarios where the behaviour of our intelligent agent could be allowed or forbidden. For instance, a given autonomous car is forbidden to cross a red signal light (at a junction), unless the agent believes there is an emergency situation, in which case it is necessary to change the agent behaviour from forbidden to allowed.

As a result, our goal is to define a temporal-deontic based logic that can be used to formally specify properties within the MCAPL framework.

[1] L. Dennis, M. Fisher, M. Webster, and R. Bordini, Model checking agent programming languages, Automated Software Engineering, vol. 19, no. 1, pp. 5-63, 2012.

[2] Fernandes, L. E. R.; Custodio, V.; Alves, G. V.; Fisher, M. A Rational Agent Controlling an Autonomous Vehicle: Implementation and Formal Verification. In Proceedings First Workshop on Formal Verification of Autonomous Vehicles, Turin, Italy, 19th September 2017, edited by Lukas Bulwahn, Maryam Kamali, and Sven Linker, 257:35-42. Electronic Proceedings in Theoretical Computer Science. Open Publishing Association, 2017. https://doi.org/10.4204/EPTCS.257.5.

Tobias Rosenberger

Software services, deployed on various machines, in the cloud, on Internet of Things devices, etc., and cooperating to perform their various tasks, play an increasingly important role in the modern economy. In many cases, incorrect behaviour of these services would put lives, health, property or privacy at risk, be it because they handle money, handle confidential data, control vehicles, or exchange records of prescribed or performed medical treatments.

It is obviously desirable to ensure certain safety and security properties of these software services in a way suitable to and taking advantage of their distinct characteristics. We will explore what the characteristic properties of services are and sketch how these inform a tool-supported development process for formally verified software that is tailored to systems composed of services.

|

| 15:15 |

Distributed Systems

Gary Bennett

In a distributed system there are times when a single entity temporarily acts as a coordinator, controlling all of the other entities in the system during the execution of a task. The need for a coordinator arises where having one simplifies the solution or because it is intrinsic to the problem. The classic problem of choosing such a coordinator is known as Leader Election. In this short talk, we present a brief historical overview of Leader Election as well as some insights from our ongoing research, which investigates asynchronous (and possibly self-stabilising) Leader Election algorithms derived from the recently published paper "On the Complexity of Universal Leader Election" [JACM 2015].

Radu Ștefan Mincu

In a multi-channel Wireless Mesh Network (WMN), each node is able to use multiple non-overlapping frequency channels. Raniwala et al. (Mobile Computing and Communications Review 2004, INFOCOM 2005) propose and study several such architectures in which a computer can have multiple network interface cards. These architectures are modeled as a graph problem named maximum edge q-coloring and studied in several papers by Feng et al. (TAMC 2007) and Adamaszek and Popa (ISAAC 2010, Journal of Discrete Algorithms 2016). Later, Larjomaa and Popa (IWOCA 2014, Journal of Graph Algorithms and Applications 2015) define and study an alternative variant, named the min-max edge q-coloring.

The above-mentioned graph problems, namely the maximum edge q-coloring and the min-max edge q-coloring, have been studied mainly from a theoretical perspective. In this paper, we study the min-max edge q-coloring problem from a practical perspective. More precisely, we introduce, implement and test three heuristic approximation algorithms for the min-max edge q-coloring problem. These algorithms are based on local search methods: basic hill climbing, simulated annealing and tabu search. Although several algorithms for particular graph classes (e.g., trees, planar graphs, cliques, bi-cliques, hypergraphs) were proposed by Larjomaa and Popa, we design the first algorithms for general graphs.
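
To make the problem concrete, here is a minimal hill-climbing sketch in Python. It is not the authors' algorithm. It assumes the usual formulation of min-max edge q-coloring (each vertex may be incident to edges of at most q distinct colours, and the objective is to minimise the size of the largest colour class), and the function names `is_valid`, `cost` and `hill_climb`, the single-edge recolouring move, and the acceptance of equal-cost moves are all illustrative choices.

```python
import random
from collections import Counter, defaultdict


def is_valid(edges, coloring, q):
    """Check that every vertex sees at most q distinct colours on its edges."""
    seen = defaultdict(set)
    for (u, v), colour in zip(edges, coloring):
        seen[u].add(colour)
        seen[v].add(colour)
    return all(len(colours) <= q for colours in seen.values())


def cost(coloring):
    """Objective to minimise: the size of the largest colour class."""
    return max(Counter(coloring).values()) if coloring else 0


def hill_climb(edges, q, steps=2000, seed=0):
    """Basic hill climbing over single-edge recolourings (illustrative only)."""
    rng = random.Random(seed)
    # Giving every edge the same colour is always valid when q >= 1.
    coloring = [0] * len(edges)
    best = cost(coloring)
    if not edges:
        return coloring, best
    for _ in range(steps):
        i = rng.randrange(len(edges))
        old = coloring[i]
        # No more than len(edges) colours are ever useful.
        coloring[i] = rng.randrange(len(edges))
        new = cost(coloring)
        # Keep the move only if it stays valid and does not worsen the cost.
        if is_valid(edges, coloring, q) and new <= best:
            best = new
        else:
            coloring[i] = old
    return coloring, best


if __name__ == "__main__":
    star = [(0, 1), (0, 2), (0, 3), (0, 4)]  # centre vertex 0, four leaves
    colouring, value = hill_climb(star, q=2)
    print(colouring, value)
```

On the star with four edges and q = 2, the centre can see at most two colours, so no valid colouring can have a largest class smaller than two; the search can only return values between 2 and 4.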

We study and compare the running data for all three algorithms on Unit Disk Graphs, as well as some graphs from the DIMACS vertex coloring benchmark dataset.

This is joint work with Alexandru Popa.

|

| 16:45 |

Biological Systems

Hanadi Alkhudhayr

Boolean networks are a widely used qualitative modelling approach which allows the abstract description of a biological system. One issue with the application of Boolean networks is the state space explosion problem which limits the applicability of the approach to large realistic systems. In this paper we investigate developing a compositional framework for Boolean networks to facilitate the construction and analysis of large scale models. The compositional approach we present is based on merging entities between Boolean networks using conjunction and we introduce the notion of compatibility which formalises the preservation of behaviour under composition. We investigate characterising compatibility and develop a notion of trace alignment which is sufficient to ensure compatibility. The compositional framework developed is supported by a prototype tool that automates composition and analysis.
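
The idea of merging entities by conjunction can be sketched as follows. This is only one possible reading of the abstract, not the framework or tool it describes: a network is assumed to be a dictionary from entity names to update rules, updates are assumed to be synchronous, and the names `compose`, `step` and `state_space` are hypothetical.

```python
from itertools import product


def compose(net_a, net_b):
    """Merge two networks; a shared entity gets the conjunction of both rules."""
    merged = dict(net_a)
    for name, rule_b in net_b.items():
        if name in merged:
            rule_a = merged[name]
            # Bind both rules as defaults so each lambda keeps its own pair.
            merged[name] = lambda s, ra=rule_a, rb=rule_b: ra(s) and rb(s)
        else:
            merged[name] = rule_b
    return merged


def step(net, state):
    """One synchronous update: every entity is recomputed from the old state."""
    return {name: bool(rule(state)) for name, rule in net.items()}


def state_space(net):
    """Enumerate all 2^n states, which is what makes large networks costly."""
    names = sorted(net)
    for values in product([False, True], repeat=len(names)):
        yield dict(zip(names, values))


if __name__ == "__main__":
    net_a = {"x": lambda s: s["y"], "y": lambda s: s["x"]}
    net_b = {"y": lambda s: not s["z"], "z": lambda s: s["y"]}
    merged = compose(net_a, net_b)  # y is shared: y' = x and (not z)
    print(step(merged, {"x": True, "y": False, "z": False}))
```

The `state_space` helper also shows why composition matters: the merged network over three entities already has eight states, and the count doubles with every added entity.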

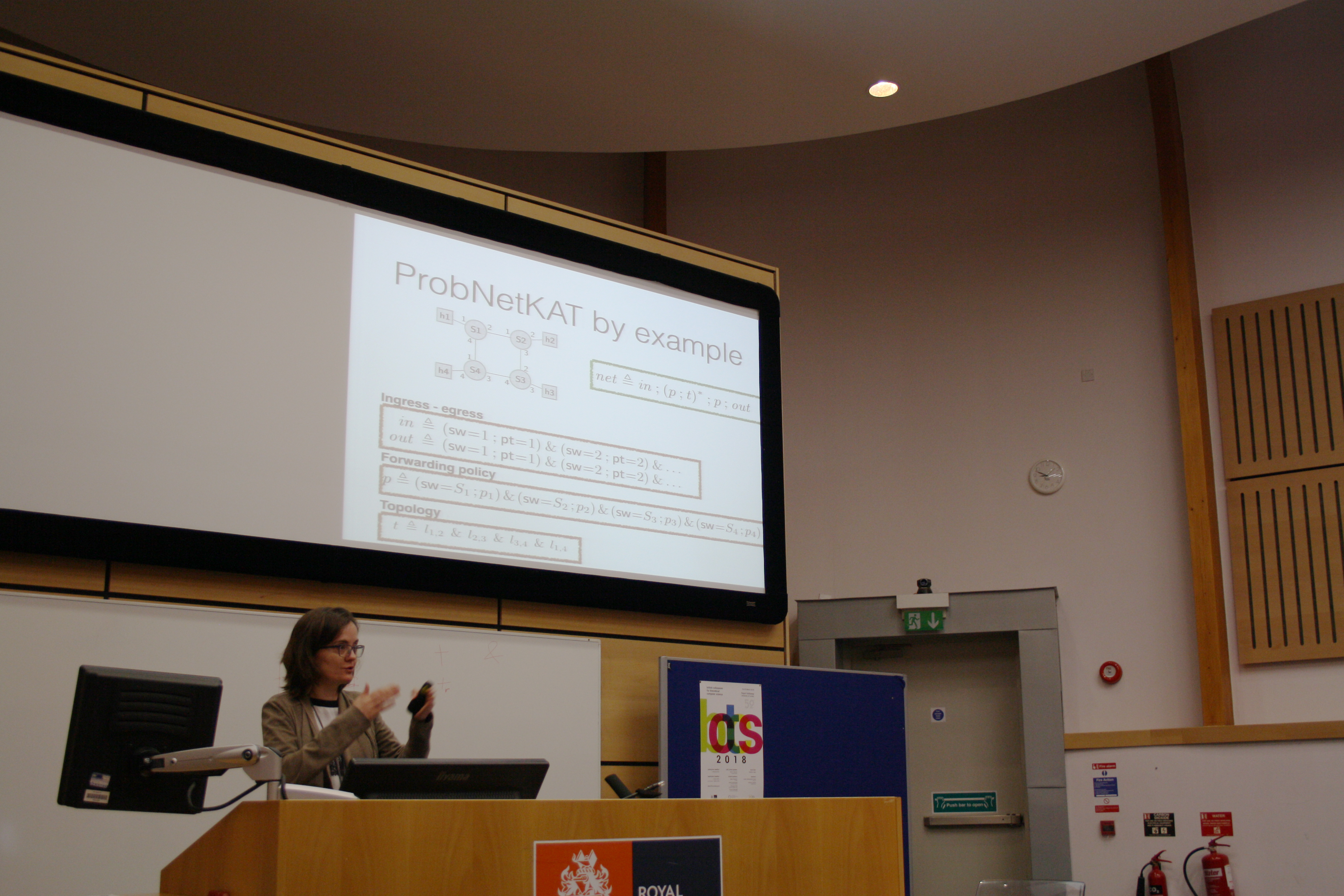

Thomas Wright

We present the bond-calculus, a process algebra for modelling biological and chemical systems featuring nonlinear dynamics, multiway interactions, and dynamic bonding of agents. Mathematical models based on differential equations have been instrumental in modelling and understanding the dynamics of biological systems. Quantitative process algebras aim to build higher level descriptions of biological systems, capturing the agents and interactions underlying their behaviour, and can be compiled down to a range of lower level mathematical models. The bond-calculus builds upon Kwiatkowski, Banks, and Stark's continuous pi-calculus by adding a flexible multiway communication operation based on pattern matching and general kinetic laws. The language has a compositional semantics based on vector fields and linear operators, allowing simulation and analysis through extraction of differential equations. We apply our framework to V. A. Kuznetsov's classic model of immune response to tumour growth, demonstrating the key features of our language, whilst validating the link between the dynamics of the model and our conceptual understanding of immune action and capturing the behaviour of the agents in a modular and extensible manner.

Rayan Alnamnakany

An organism is made of a number of components, including RNA and protein. Recent studies show that gene expression at the post-transcriptional level is critically regulated by a number of regulatory factors, including microRNAs (miRNAs) and RNA-binding proteins (RBPs). The expression of a gene mainly depends on the interaction between RBPs and miRNAs, which are considered two of the important factors in regulating the expression of genes. RBPs and miRNAs have been implicated in a number of human diseases, such as cancers. Identifying RNA-protein interactions has become one of the central questions in biomedical research, as understanding this mechanism may make it possible to design new medicines that cure diseases. These two factors can work competitively or cooperatively with each other, and either indirectly or directly, in order to adjust the expression of their target mRNAs. A new topic is therefore emerging: designing algorithms and software tools that account for the interplay between miRNAs and RBPs in post-transcriptional regulation. At present, there is only a single bioinformatics tool, namely SimiRa, that addresses the issue of miRNA-RBP cooperation; the tool was made public only a couple of months ago. While SimiRa relies on gene-expression data analysis and pathway features, the proposed research aims at a performance analysis of different machine learning methods for the prediction of mRNA regulation by miRNAs and RBPs. The proposed research will focus on structural elements, i.e., features of miRNA binding sites as well as RBP binding sites in secondary RNA structures, including meta-stable RNA secondary structures.

|